Why Ethical AI in Hiring Matters Today

You deserve a hiring process that values fairness and transparency. Ethical AI in hiring plays a vital role in achieving this goal. It helps keep recruitment decisions free from bias and promotes trust between employers and candidates. For example:

Nearly 40% of companies using AI tools have reported bias in hiring, highlighting the need for ethical practices.

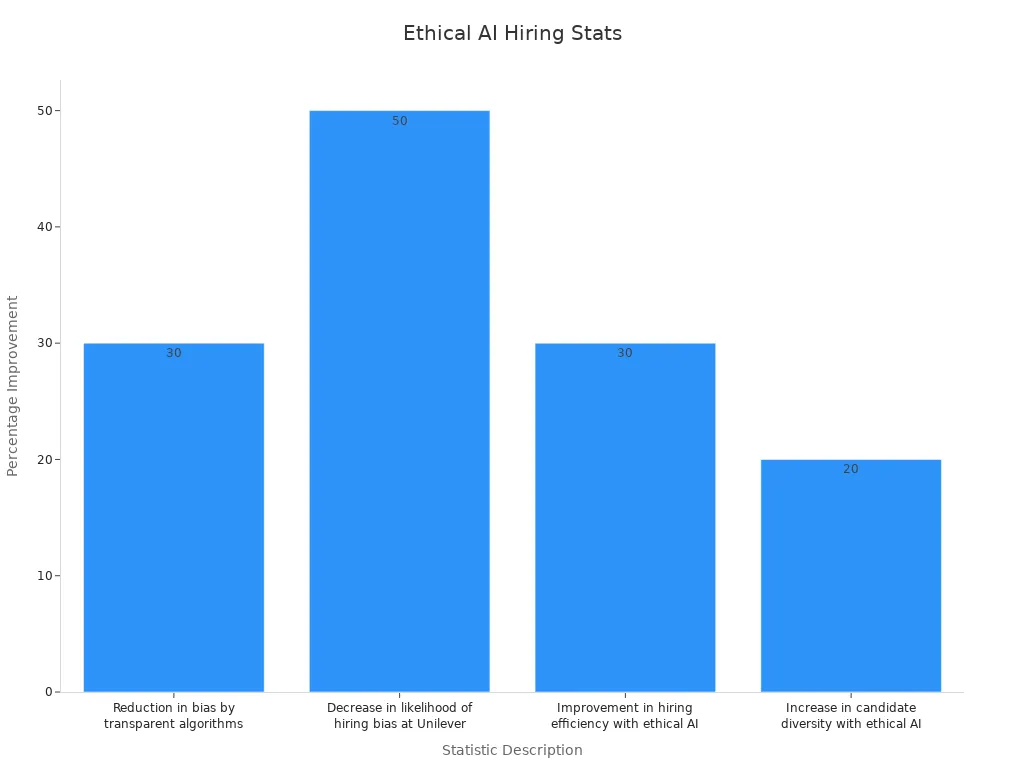

Transparent algorithms can reduce bias by up to 30%, leading to more diverse workplaces.

With 37% of adults identifying racial or ethnic bias as a major hiring issue, ethical AI offers a solution to create equitable opportunities for all.

Key Takeaways

Ethical AI makes hiring fair by treating all candidates equally.

Transparent algorithms can cut hiring bias by up to 30%, supporting more diverse teams.

Checking AI systems often helps find and fix unfairness.

Using varied data in AI stops unfair treatment of some groups.

Adding human checks to AI builds trust and ensures fairness.

What Is Ethical AI in Hiring?

Defining Ethical AI in Recruitment

Ethical AI in hiring refers to the responsible use of artificial intelligence in recruitment processes to ensure fairness, transparency, and compliance with legal standards. It focuses on creating systems that evaluate candidates without bias and provide clear, explainable decisions.

Key features that define ethical AI in hiring include:

| Feature | Description |
|---|---|
| Transparency | Clear communication of AI decision-making processes to candidates, ensuring they understand evaluations. |
| Bias Mitigation | Regular audits and diverse training datasets to reduce inherited biases from training data. |
| Regulatory Compliance | Adherence to laws like the EU’s Artificial Intelligence Act and U.S. state laws on hiring transparency. |

These features ensure that AI tools align with ethical principles, fostering trust between employers and candidates. For example, companies using fairness-aware algorithms and conducting bias audits have reported a 48% reduction in hiring bias.

The Importance of Ethical AI in Modern Hiring

Ethical AI in hiring plays a crucial role in shaping equitable recruitment practices. When implemented correctly, it can improve hiring efficiency by 30% and increase candidate diversity by 20%. Companies like Unilever have demonstrated this by reducing hiring bias by 50% through ethical AI frameworks.

However, the risks of unethical AI highlight its importance. Amazon's AI recruiting tool, for instance, was abandoned after it penalized resumes with terms associated with women. This case underscores how reliance on biased historical data can perpetuate discrimination.

Organizations using ethical AI also benefit from improved compliance with laws and regulations. For instance, adhering to GDPR and CCPA ensures data privacy and security, while explainable AI methodologies provide insights into hiring decisions. These practices not only reduce legal risks but also build trust with candidates.

By prioritizing ethical AI, you can create a recruitment process that values fairness, transparency, and inclusivity. This approach not only benefits candidates but also strengthens your organization's reputation and long-term success.

Benefits of Ethical AI in Hiring

Ensuring Fairness in Recruitment Processes

Ethical AI in hiring gives every candidate a fair chance during recruitment. By using standardized criteria, AI tools evaluate applicants consistently, limiting favoritism and subjective judgment. For example, Unilever's AI-driven recruitment process screens over 1.8 million applicants annually, applying the same standards to all candidates. This approach not only improves fairness but also enhances efficiency.

Transparent algorithms also play a key role in promoting fairness. Studies show that such algorithms can reduce bias by up to 30%. By clearly explaining how decisions are made, these systems help candidates understand the evaluation process, fostering trust and confidence in the hiring system.

Reducing Bias in AI Algorithms

AI algorithms can unintentionally inherit biases from the data they are trained on. Ethical AI addresses this issue by identifying and removing problematic data points. For instance, MIT researchers developed a method to detect and eliminate training examples that contribute to biased outcomes. This technique reduces bias without compromising accuracy, ensuring fairer results for underrepresented groups.

Companies also conduct regular AI audits to monitor and improve algorithm performance. These audits assess the reliability of AI tools and ensure they align with fairness principles. By implementing such measures, organizations can significantly reduce the likelihood of biased hiring decisions.

| Evidence Type | Description |
|---|---|
| Research Article | Continuous monitoring and diverse data collection reduce algorithmic bias. |
| Article | Fairness measures promote balanced outcomes and reflect diverse perspectives. |

Enhancing Compliance with Employment Laws

Ethical AI in hiring helps organizations comply with employment laws and regulations. For example, New York City Local Law 144 requires annual bias audits for AI hiring tools. Companies like HireVue have conducted these audits to check their systems for race and gender bias. This proactive approach aligns with legal requirements and promotes ethical practices.

Data privacy is another critical aspect. Employers must ensure that AI systems protect sensitive information and comply with regulations like GDPR. By adhering to these standards, organizations can avoid legal risks and build trust with candidates.

| Compliance Area | Description |
|---|---|
| Data Privacy | Protects sensitive employee data under GDPR regulations. |
| Bias and Discrimination | Implements fairness measures to prevent biased outcomes. |
| Employment Laws | Ensures adherence to laws on discrimination, wages, and hiring practices. |

By prioritizing compliance, you can create a recruitment process that is both ethical and legally sound.

Building Trust Between Employers and Candidates

Trust forms the foundation of any successful hiring process. Ethical AI in hiring strengthens this trust by creating a transparent and fair recruitment experience. When candidates feel confident that they are evaluated based on merit, they are more likely to engage positively with your organization.

Transparency plays a key role in building this trust. AI systems that clearly explain how decisions are made help candidates understand the process. For example, organizations with transparent AI systems have seen a 35% drop in complaints about bias during hiring. This clarity reassures candidates that the system treats them fairly.

Reducing bias also enhances trust. Many job seekers worry about discrimination in AI-driven hiring. A 2021 study found that 78% of candidates expressed concerns about bias in these processes. Ethical AI addresses this by using diverse datasets and conducting regular audits. These measures ensure that the system evaluates all candidates equally, fostering a sense of fairness.

Improved candidate satisfaction is another outcome of ethical AI. When candidates perceive the process as fair and unbiased, they feel more valued. This satisfaction not only builds trust but also enhances your organization's reputation. Candidates are more likely to recommend your company to others, creating a positive cycle of trust and engagement.

By prioritizing ethical AI in hiring, you can create a recruitment process that values fairness and transparency. This approach not only benefits candidates but also strengthens your relationship with them, ensuring long-term success for your organization.

Risks of Unethical AI in Hiring

The Impact of Biased Algorithms on Recruitment

Unethical AI in hiring can lead to biased recruitment outcomes, which undermine fairness and diversity. Algorithms trained on biased historical data often replicate and amplify societal inequities. For example:

A study co-authored by Microsoft researchers found that word embeddings trained on news text linked men to programming roles and women to homemaking, reinforcing stereotypes.

Amazon's AI recruitment tool discriminated against female candidates because it was trained on male-dominated datasets.

These examples highlight how biased algorithms can favor privileged groups while excluding qualified candidates from underrepresented backgrounds. This not only limits diversity but also causes companies to miss out on top talent. Experts emphasize that algorithms reflect the biases in their training data, making it impossible for AI to eliminate discrimination without human intervention.

Transparency Challenges in AI-Driven Decisions

Transparency is a critical issue in AI-driven hiring. Many candidates feel frustrated when they don’t understand how decisions are made. Studies show that:

| Statistic | Percentage |
|---|---|
| Candidates receiving no feedback after screening | |
| Candidates receiving no feedback after rejection | 69.7% |

The lack of feedback creates a perception of unfairness and reduces trust in the hiring process. Complex AI systems often function as "black boxes," where decision-making processes are too intricate to explain. This opacity can make decisions seem arbitrary, leading to complaints and disengagement. Providing clear explanations for hiring outcomes can significantly improve trust and candidate satisfaction.

Legal and Reputational Risks for Companies

Unethical AI practices expose companies to legal and reputational risks. Employers may face lawsuits under laws like the Americans with Disabilities Act and Title VII of the Civil Rights Act if AI systems produce biased outcomes. Beyond litigation, biased algorithms can hinder diversity efforts, damaging an employer's brand and employee satisfaction. In severe cases, companies may face costly investigations or public backlash.

By addressing these risks, you can protect your organization from legal troubles and maintain a positive reputation in the competitive hiring landscape.

Best Practices for Implementing Ethical AI in Hiring

Conducting Regular AI Audits

Regular AI audits are essential to ensure fairness and accuracy in hiring processes. These audits help identify and correct biases in AI systems, ensuring they align with ethical standards. For example, New York City mandates annual AI bias audits to prevent discrimination in hiring. Companies like HireVue have embraced this practice, conducting hundreds of bias audits to comply with regulations and improve their systems.

Audits also play a critical role in addressing issues like biased training data and flawed algorithms. Amazon's AI recruitment tool, which favored male candidates, highlights the importance of ongoing evaluations. By implementing regular auditing protocols, you can detect and resolve such problems before they impact hiring outcomes.

To enhance the effectiveness of audits, consider these best practices:

Collaborate with cross-functional teams, including HR, legal, and compliance experts, to ensure comprehensive evaluations.

Establish clear documentation standards for algorithm design and testing to maintain transparency.

Develop escalation procedures to address ethical concerns promptly.
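A bias audit usually starts with simple arithmetic. The sketch below shows one illustrative way to compute each group's selection rate and its impact ratio (the group's rate divided by the highest group's rate), the kind of metric reported in audits under New York City Local Law 144. The function names, the sample data, and the 0.8 threshold (the "four-fifths" rule of thumb from U.S. EEOC guidance) are assumptions for illustration, not a legal test or any vendor's method.

```python
from collections import Counter


def selection_rates(records):
    """Return {group: selected / total} for (group, was_selected) pairs."""
    totals, selected = Counter(), Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected; ratios are undefined")
    return {g: r / best for g, r in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """List groups whose impact ratio falls below the (assumed) threshold."""
    return sorted(g for g, r in ratios.items() if r < threshold)


# Made-up screening outcomes: (group label, advanced to interview?)
sample = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 25 + [("B", False)] * 75
)
rates = selection_rates(sample)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
```

In this made-up sample, group B advances at 25% against 40% for group A, an impact ratio of about 0.63, so it is flagged for closer review. A flag is a prompt for investigation by HR, legal, and compliance, not proof of discrimination.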

Using Diverse and Inclusive Data Sets

AI systems rely on data to make decisions, so using diverse and inclusive datasets is crucial. Without this, AI may perpetuate existing biases. For instance, women earn only 26% of science and math degrees, and Black students account for just 5% of science and engineering graduates. These disparities can skew AI outcomes if not addressed.

By incorporating diverse data, you can create systems that evaluate candidates more fairly. Algorithms designed with diversity constraints can help companies interview a broader range of candidates. Adjusting candidate priority indices to focus on underrepresented groups also leads to more equitable hiring outcomes.

Experts emphasize the importance of diverse teams in AI development. Varied perspectives result in stronger, fairer technology. Regularly reviewing and updating datasets ensures your AI systems reflect societal values and promote inclusivity.
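One simple way to review a dataset is to compare each group's share of the training data with a reference share you consider appropriate. The sketch below is a minimal illustration; the function names, the 5-point tolerance, and the reference shares are hypothetical choices, and picking a suitable reference population is a judgment call for your own team.

```python
from collections import Counter


def group_shares(labels):
    """Return each group's share of the dataset as a fraction of 1."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {g: c / total for g, c in counts.items()}


def underrepresented(labels, reference, tolerance=0.05):
    """Return {group: (observed, expected)} where the observed share
    falls short of the reference share by more than the tolerance."""
    shares = group_shares(labels)
    gaps = {}
    for group, expected in reference.items():
        observed = shares.get(group, 0.0)  # groups missing entirely count as 0
        if expected - observed > tolerance:
            gaps[group] = (observed, expected)
    return gaps


# Made-up training data: group label for each historical application
training_labels = ["men"] * 80 + ["women"] * 20
reference = {"men": 0.5, "women": 0.5}  # assumed reference shares
gaps = underrepresented(training_labels, reference)
```

Here women make up 20% of the made-up training data against an assumed reference of 50%, so the check reports that gap. A result like this suggests collecting more data or reweighting before the model is trained.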

Incorporating Human Oversight in AI Decisions

Human oversight is a cornerstone of ethical AI in hiring. It ensures accountability and aligns AI decisions with ethical standards. For example, humans can review AI recommendations to accept, modify, or override them when necessary. This approach prevents errors and ensures decisions reflect societal values.

Continuous monitoring of AI systems is another critical practice. Teams should watch for anomalies in hiring decisions and address them promptly. Ethical review boards can also assess AI models for biases and ethical implications, providing an additional layer of accountability.

By incorporating human oversight, you can build trust in your hiring process. Candidates feel more confident knowing that humans, not just machines, are involved in evaluating their applications. This practice strengthens your organization's reputation and ensures fairer outcomes.
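The accept, modify, or override step can be sketched as a small routing rule. This is an illustrative design, not a standard: the class names, the 0.9 confidence cutoff, and the policy that every rejection goes to a person are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    candidate_id: str
    ai_decision: str   # "advance" or "reject"
    confidence: float  # model's self-reported score in [0, 1]


@dataclass
class FinalDecision:
    candidate_id: str
    decision: str
    decided_by: str    # "ai" or "human"
    overridden: bool   # True when the reviewer changed the AI's call


def needs_human_review(rec, min_confidence=0.9):
    """Assumed policy: rejections and low-confidence calls go to a person."""
    return rec.ai_decision == "reject" or rec.confidence < min_confidence


def finalize(rec, reviewer_decision: Optional[str] = None):
    """Combine the AI recommendation with the reviewer's decision, if any."""
    if needs_human_review(rec):
        if reviewer_decision is None:
            raise ValueError(f"{rec.candidate_id} requires human review")
        return FinalDecision(
            rec.candidate_id,
            reviewer_decision,
            "human",
            overridden=reviewer_decision != rec.ai_decision,
        )
    return FinalDecision(rec.candidate_id, rec.ai_decision, "ai", overridden=False)


# A reviewer overrides an AI rejection
result = finalize(Recommendation("c-102", "reject", 0.97), reviewer_decision="advance")
```

Recording who made each decision and whether the AI was overridden gives review boards an audit trail. A rising override rate is one anomaly worth investigating.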

Ensuring Transparency and Explainability in AI Systems

Transparency and explainability are essential for building trust in AI-driven hiring systems. When candidates understand how decisions are made, they feel more confident in the fairness of the process. AI systems that clearly explain their decision-making steps let candidates know why they were selected or rejected. This clarity fosters trust and reduces frustration.

A lack of transparency can erode that confidence. Research shows that when AI systems fail to provide detailed feedback, applicants often feel the process is unfair. This undermines trust and discourages engagement. By enhancing the interpretability of AI systems, companies can create a fairer and more transparent recruitment experience.

Transparency is not just an ethical responsibility; it can also be a legal one. The GDPR, for example, restricts decisions based solely on automated processing and is widely read as giving individuals a "right to explanation" for algorithmic decisions that affect them. In hiring, this keeps companies accountable for their AI systems. When organizations prioritize explainability, they align with these legal standards and demonstrate their commitment to fairness.

To achieve transparency, companies can implement several practices:

Provide clear feedback: Share specific reasons for hiring decisions to help candidates understand the process.

Use explainable AI models: Choose systems designed to offer insights into their decision-making.

Train hiring teams: Equip staff with the knowledge to interpret and communicate AI outcomes effectively.

By prioritizing these steps, organizations can create a hiring process that values fairness and accountability. When candidates see transparency in action, they are more likely to trust the system and engage positively with the company.
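To make "explainable" concrete, here is a minimal sketch of a transparent additive score that reports how much each criterion contributed. The criteria, weights, and function names are hypothetical; real systems are more complex, and this is not how any particular vendor's model works.

```python
# Hypothetical job-related criteria and weights, chosen for illustration only
WEIGHTS = {
    "years_experience": 0.5,
    "skills_matched": 1.0,
    "assessment_score": 2.0,
}


def explain_score(features, weights=WEIGHTS):
    """Return the total score and each criterion's contribution, largest first."""
    contributions = {
        name: weights[name] * features.get(name, 0.0) for name in weights
    }
    total = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return total, ranked


def feedback_lines(features, weights=WEIGHTS):
    """Format the score breakdown as plain text a recruiter could share."""
    total, ranked = explain_score(features, weights)
    lines = [f"Overall score: {total:.1f}"]
    lines += [f"{name}: {value:+.1f}" for name, value in ranked]
    return lines


candidate = {"years_experience": 4, "skills_matched": 3, "assessment_score": 0.8}
report = feedback_lines(candidate)
```

Because the score is a plain weighted sum, every point in the total traces back to a named criterion. That makes it straightforward for a trained hiring team to give candidates specific feedback.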

Ethical AI in hiring is essential for creating fair and transparent recruitment processes. It enhances efficiency, reduces bias, and promotes diversity in the workforce. For example, companies like Unilever and IBM have successfully implemented AI systems that focus on fairness and inclusivity, improving hiring outcomes. However, challenges like discrimination risks and the need for accountability highlight the importance of ethical implementation.

To lead in responsible hiring, you must adopt ethical practices. Regular audits, diverse datasets, and human oversight ensure AI tools align with fairness principles. By taking these steps, you can build trust, comply with legal standards, and create a more equitable hiring process.

FAQ

What is the main goal of ethical AI in hiring?

Ethical AI in hiring ensures fairness, transparency, and inclusivity. It evaluates candidates based on merit while reducing bias. This approach creates equal opportunities for all applicants and builds trust between you and potential employers.

How can you tell if a company uses ethical AI in hiring?

Look for transparency in their hiring process. Ethical companies often explain how AI evaluates candidates. They may also mention regular audits, diverse datasets, or compliance with laws like GDPR. These practices show their commitment to fairness.

Tip: Ask about their AI policies during interviews to learn more.

Why do AI systems sometimes show bias in hiring?

AI systems learn from historical data. If the data contains biases, the AI may replicate them. For example, if past hiring favored one group, the AI might continue that trend. Regular audits and diverse datasets help fix this issue.

Can ethical AI completely eliminate bias in hiring?

No, but it can significantly reduce it. Ethical AI minimizes bias by using diverse data and human oversight. While it cannot guarantee perfection, it creates a fairer and more transparent hiring process.

How does ethical AI benefit you as a candidate?

Ethical AI ensures you are evaluated fairly, based on your skills and qualifications. It reduces the risk of discrimination and provides transparency in hiring decisions. This approach builds trust and increases your confidence in the recruitment process.

Note: Ethical AI also helps create diverse workplaces, benefiting everyone involved.
